The brain is waking and with it the mind is returning. It is as if the Milky Way entered upon some cosmic dance. Swiftly the head mass becomes an enchanted loom where millions of flashing shuttles weave a dissolving pattern, always a meaningful pattern though never an abiding one; a shifting harmony of subpatterns.

Charles S. Sherrington, Man on his Nature, 1940

When Sherrington described the human brain as the enchanted loom in the mid-20th century, the Jacquard loom featured in his prose had stood for over a hundred years as one of the most complex mechanical devices ever invented. It used a system of punched cards that encoded complex patterns to be woven into textile. The punched cards devised for the Jacquard loom would later find wider use in early computers, which were also programmed with punched cards. This is where software was born.

Given the place the Jacquard loom held in the history of mechanical craftsmanship, it’s no surprise that Sherrington imagined the human brain, still the most complex system we know of in the universe, as a system of looms weaving ephemeral patterns into memories and cognition.

Decades have passed, and the complexity of microprocessors used in Internet-scale computing systems dwarfs the complexity of even the largest Jacquard patterns. Today, we imagine usurping the capabilities of the human brain with software instead of looms.

We’ve gotten closer, but I’m not sure that computers as we know them will get us there. Inventing the computer and the deep neural network may still be among the first few steps in replicating the magic of the enchanted loom, and in this post, I want to explore the future that I imagine in our steps forward.

A new kind of computer

I firmly believe that deep neural networks represent a fundamentally different kind of tool than computers as we’ve known them since their invention.

Programs other than neural nets that run on conventional computers – a class of programs I’ll call “classical computing” – are all built on the same fundamental conceptual foundation: the ability to emulate Turing machines efficiently.

The Turing machine is an abstract model of what it means to “compute” something. The model has a small machine working with a limited set of rules along an infinite tape of “memory” on which it can read and write data, and computer scientists consider the Turing machine the de facto model of what a computer is capable of. This means that any classical program we can write for a computer can be re-written as a program fed into a Turing machine. All modern computers, from your smartphone and laptop to servers in Google’s datacenters to the microcontroller in your thermostat, are built on this foundational capability of emulating a Turing machine, and can thus solve the same problems a Turing machine can theoretically solve.
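To make the "small machine, limited rules, infinite tape" picture concrete, here is a minimal sketch of a Turing machine simulator in Python. The function name, the rule format, and the binary-increment rule table are all my own illustrative choices, not a standard notation, and the "infinite" tape is approximated with a dictionary that grows on demand.

```python
from collections import defaultdict


def run_turing_machine(rules, tape, state="start", blank="_", max_steps=10_000):
    """Run a one-tape Turing machine until it enters the "halt" state.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is -1 (one cell left) or +1 (one cell right).
    """
    # The tape is unbounded in both directions: unseen cells read as blank.
    cells = defaultdict(lambda: blank, enumerate(tape))
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached before halting")
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += move
        steps += 1
    # Return the tape contents between the outermost non-blank cells.
    used = [i for i, symbol in cells.items() if symbol != blank]
    if not used:
        return ""
    return "".join(cells[i] for i in range(min(used), max(used) + 1))


# Hypothetical rule table: add one to a binary number written on the tape.
INCREMENT_RULES = {
    ("start", "0"): ("0", +1, "start"),  # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),  # step back onto the last digit
    ("carry", "1"): ("0", -1, "carry"),  # 1 + 1 = 0, carry continues left
    ("carry", "0"): ("1", -1, "halt"),   # absorb the carry and stop
    ("carry", "_"): ("1", -1, "halt"),   # ran off the left edge: new digit
}

print(run_turing_machine(INCREMENT_RULES, "1011"))
```

Six rules are enough to add one to any binary number, and the same simulator will run any other rule table you hand it. That generality is the point: the machine stays fixed while the rules change.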

This is the reason that a program written for one computer can be safely re-written for another computer, and an algorithm designed for one programming language can be re-written in another language. All of these tools and computers encode the same problem-solving power, which is the computational capability that a Turing machine represents. This capability includes things like efficient arithmetic, simulation, and working with strings of data, and these capabilities of the Turing machine turn out to unlock a whole world of power for users of computers – the power we enjoy today on our personal devices.

The Turing machine encodes a particular class of problems that classical computers can solve. But there are problems that are intractable for even the fastest, most efficient Turing machines, problems like factoring the products of large prime numbers, infinite-precision decimal arithmetic, and the traveling-salesman problem. We depend on the intractability of some of these problems for the security of modern cryptography.

But just because these problems are impenetrable to computers based on Turing machines doesn’t mean they are impossible problems. For example, a classical computer can’t work with infinite-precision decimal numbers, because it has finite memory. But we humans can trivially work with infinite-precision quantities, by using geometry. If you wanted me to tell you the exact value of the golden ratio, an irrational number, I could construct a geometric representation for you with a pencil, a ruler, and a compass, and you could measure it to any desired level of precision. Turing machine-based computers are powerful, but their power has limits.

Classical computers, like the one you have in your pocket or the one on which you’re reading this post, give us the computational capability to solve a specific class of problems. There are other kinds of computers that unlock the ability to solve other kinds of problems that are intractable for the computers we’re used to.

Astute readers will already know one such example of a new kind of computer: the quantum computer. Quantum computers don’t operate on the Turing machine model. Instead, the equivalent concept – the “quantum Turing machine” – encodes the total computational power of quantum computers in a mathematical model. Quantum computers give us a new kind of computational capability, namely the ability to run algorithms over a whole range of possible values, rather than a single value as in a classical computer. Using this new capability, we can attack new kinds of problems previously intractable for a Turing machine-based computer, like factoring the products of large prime numbers, which is possible on a quantum computer using Shor’s algorithm.

In summary, there exist different computational capabilities, defined by the kinds of problems we can solve with each particular model of computing. Two examples are classical computers, emulating a Turing machine, and quantum computers, operating on a state space of values rather than single pieces of data.

Deep neural nets, I think, represent a third kind of computational capability.

The Turing machine for cognition

Training and making predictions with a deep neural net can run on a classical computer (as it almost always does today), but the operations that make up computation with a neural net aren’t the atoms of Turing machines. They’re something different: large, massively multi-dimensional matrix multiplications calculating weights and gradients across huge numbers of values at once in uniform ways. This fundamentally different computation model is what makes DNNs so well-suited to run on parallel processors like GPUs. But beyond that simple acceleration, we’ve been inventing new computing hardware for deep neural nets for a while now, better suited to the kinds of computational work required for a neural net. We’ve invented new kinds of processors (tensor processors), new kinds of data representations (bfloat16, reduced-precision floats), and alternative ways of writing programs (dataflow graphs) that are better suited to this new kind of computational power.
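As a rough illustration of what "uniform" means here, the sketch below computes one dense neural-net layer in plain Python. The helper names (`matmul`, `relu`, `dense_layer`) and the toy numbers are mine, chosen for illustration; real frameworks do the same arithmetic on far larger matrices and hand it off to parallel hardware.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), given as lists of rows."""
    return [
        [sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
        for row in a
    ]


def relu(matrix):
    """Apply the ReLU nonlinearity, max(0, v), to every entry."""
    return [[max(0.0, v) for v in row] for row in matrix]


def dense_layer(inputs, weights, biases):
    """One layer of a neural net: relu(inputs @ weights + biases)."""
    pre_activation = matmul(inputs, weights)
    shifted = [[v + b for v, b in zip(row, biases)] for row in pre_activation]
    return relu(shifted)


# Toy example: one input with two features, a layer with two units.
inputs = [[1.0, 2.0]]
weights = [[1.0, -1.0],
           [0.5, 1.0]]
biases = [0.0, -2.0]

print(dense_layer(inputs, weights, biases))
```

Every output entry comes from the same multiply-and-accumulate recipe, and no entry depends on any other. That independence is why this work spreads so naturally across the thousands of arithmetic units in a GPU.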

I think this suggests that deep neural nets represent a fundamentally new computational capability, different from classical programs that run sequentially on CPUs and efficiently emulate Turing machines. I’ll call this new capability cognitive computing to contrast with classical computing and quantum computing. Deep neural network-based software systems aren’t about emulating Turing machines or running algorithms designed for classical computers. Instead, they give computers the ability to solve new kinds of problems that resemble cognition.

Like quantum computers, DNNs open up a new, previously intractable class of problems for computers. These problems generally look like cognitive or perceptive tasks, things like learning the rules of an unknown system, recognizing patterns in data, breaking down images and text into sensible parts, and making inferences based on past patterns.

“But Linus,” you might object to this idea, “if deep neural nets really represent a new kind of computational capability, how can we run them on classical computers?”

Excellent question.

Beyond vacuum tubes

Even though cognitive and quantum computing represent different kinds of computational power, they aren’t completely inaccessible to each other. For example, it’s possible for fast classical computers, like the CPU in your laptop, to simulate a small quantum computer, albeit very slowly and inefficiently. But because a classical computer simulating a quantum computer has to keep track of exponentially more data, large quantum problems for which quantum computers are useful are impractical to simulate on a classical computer. (And by “impractical” I mean that constructing a computer capable of it would require more atoms than exist in the universe.)
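A back-of-the-envelope sketch shows how quickly "exponentially more data" gets out of hand. It assumes the most direct simulation method, which stores one 16-byte complex amplitude for each of the 2^n basis states of n qubits, and it takes the commonly quoted rough estimate of 10^80 atoms in the observable universe. Both the function names and those figures are assumptions for illustration; cleverer simulation techniques can do better on some problems.

```python
BYTES_PER_AMPLITUDE = 16        # assumption: two 64-bit floats per complex amplitude
ATOMS_IN_UNIVERSE = 10 ** 80    # assumption: commonly quoted rough estimate


def statevector_bytes(num_qubits):
    """Memory needed to store the full state of num_qubits qubits."""
    return BYTES_PER_AMPLITUDE * 2 ** num_qubits


def qubits_to_exceed(limit):
    """Smallest qubit count whose number of amplitudes exceeds limit."""
    n = 0
    while 2 ** n <= limit:
        n += 1
    return n


for n in (10, 30, 50):
    print(n, "qubits:", statevector_bytes(n), "bytes")

print("amplitudes outnumber atoms at", qubits_to_exceed(ATOMS_IN_UNIVERSE), "qubits")
```

Under these assumptions, 30 qubits already need 16 GiB, each additional qubit doubles the bill, and a few hundred qubits call for more stored numbers than there are atoms to store them in.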

When we run cognitive algorithms on deep neural nets on GPUs and tensor processors today inside our classical computers, we’re doing something akin to simulating quantum computers inside classical ones. We’re taking a massive hit in efficiency, for the benefit that we already know how to work with classical computers! But to really take advantage of the best that DNNs can offer humanity, we’ll need more efficient and DNN-friendly computational substrates.

Computing capabilities, computing substrates

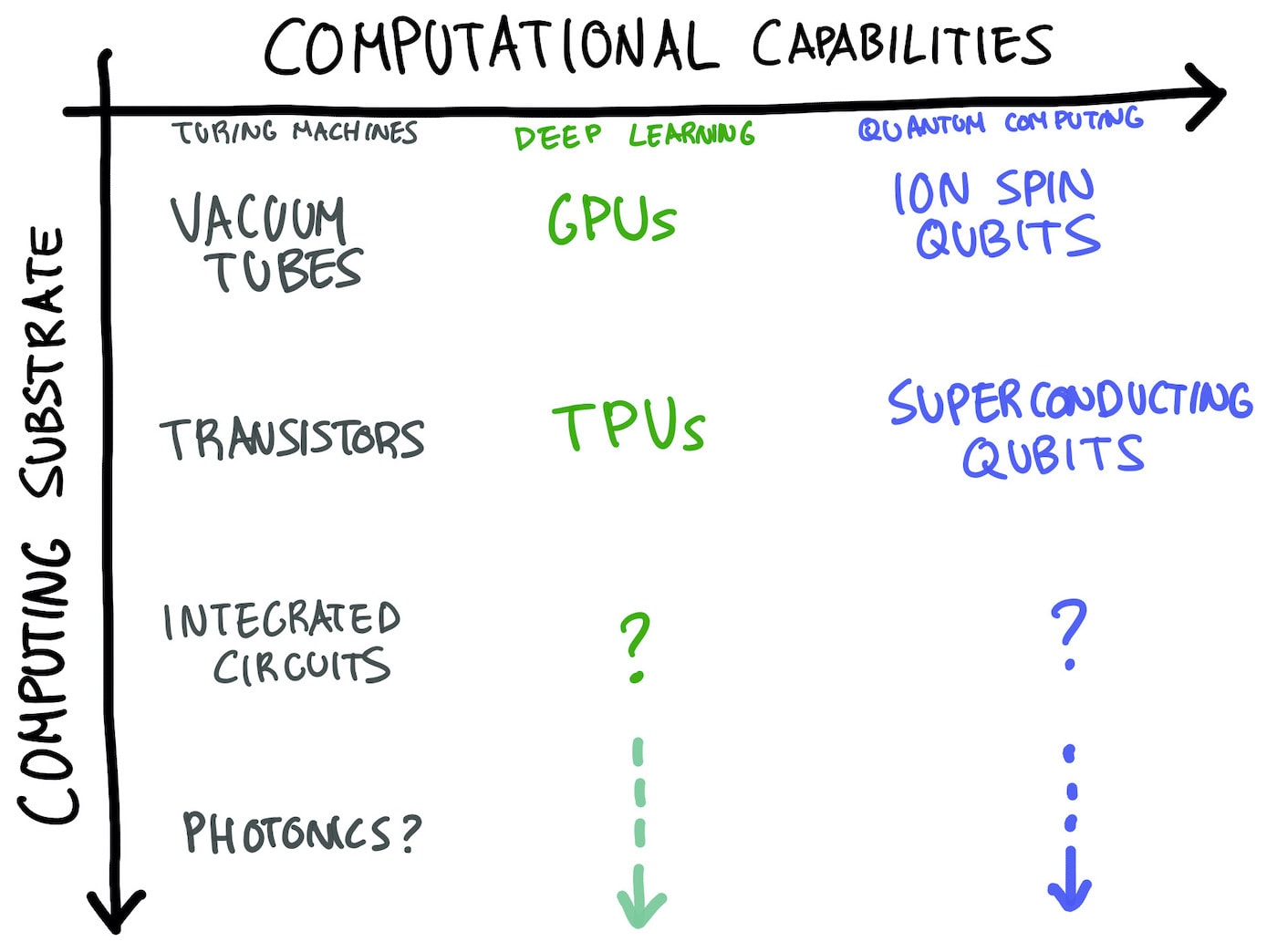

In the possibility space of computers, there are two axes: capabilities and substrates. A computational capability describes the kinds of problems that a computer can solve: quantum algorithms, deep learning algorithms, basic arithmetic, and so on. A computational substrate is the physical material on which the algorithm runs: paper-and-pen, vacuum tubes, microprocessors, trapped ions, GPUs, and so on.

For each computational capability, there are many, many substrates on which we can execute the same algorithms. The first computers that ran on vacuum tubes can theoretically run the same programs that my iPhone 12 runs today, even though they use different substrates under the hood, because they implement the same computational capability, namely the Turing machine.

When any new computational capability is introduced, we usually take a few tries to discover the right substrate that’s efficient, fast, and cheap to manufacture. For classical computers, this brought us from punched cards and vacuum tubes to flash memory and transistors in laser-etched silicon. For quantum computers, there is ongoing research to discover materials that make more efficient qubits, like trapped ions and superconducting materials.

For the cognitive computation power that deep neural nets represent, our current solution – silicon chips like GPUs and tensor processors strung together into giant datacenters – is a first step, but I think it’s nowhere near as efficient or cheap as it could be.

Firing up a multi-million dollar server farm to train a cutting-edge natural language model like GPT-3 feels a lot like firing up a building-sized computer in the early days of classical computing. We didn’t get to smartphones by simply shrinking vacuum tubes and punchcards; we got here because we found new substrates for the same computational power, namely laser-etched transistors on silicon. I imagine, in a hundred years, if we have GPT-10 in our pockets, it won’t run on GPUs and TPUs, but on a new material entirely.

I believe there exist materials that will enable smaller, faster, far cheaper and far more efficient computers that can see, think, and speak. As one example, the human brain is a powerful cognitive computer, and runs on organic materials, not silicon. If we’re still doing the work of cognitive computing on silicon in 100 years, it would probably signal a tragic stagnation in humanity’s ability to discover better ways to build computers.

Discovering magic

If we treat cognitive computing as a new computational power that unlocks a fundamentally new class of previously intractable problems, we can imagine much more exciting futures for computer technology at large.

For one, I think it opens up the enchanting possibility that there is not just the one “computer” or the one “Turing machine” that lets us solve just one kind of hard problem, but a vast universe of different computational powers and substrates on which we’ll discover ways to solve a million different kinds of hard problems. In this future, we’ll discover a problem, and match it with the kind of computing technology best fit to attack it in service of humanity.

This future of multicolored computing contains not just classical computers and cognitive computers and quantum computers, but a myriad of other computational materials and algorithms that work together to solve hard problems and answer questions that appeared impossible with previous generations of computers. Perhaps every fifty years, we’ll discover a new kind of computational power that we didn’t even realize existed before, and gift ourselves the ability to solve problems we previously dismissed as impossible.

Magic is simply technology we haven’t invented yet. Today, the best technology we have is large, planet-spanning software systems. So when we imagine engineering magic, we imagine even more complex and powerful computer systems. But the reality might be even more fantastical.

As Charles Sherrington imagined weaving the magic of artificial intelligence on a loom, and we today imagine etching superintelligence into ever-faster silicon, the next generation of engineers and thinkers will imagine encoding magic in organic molecules, transmitting magic in gravitational waves, and threading magic into streams of light.

In this long future of computing, superhuman artificial intelligence isn’t the holy grail, it’s just the next milestone. The zoo of computational capabilities is infinite. The doors have only just opened, the first few inhabitants found, but the real work is only getting started.
