Direct manipulation interfaces let us interact with software materials using our spatial and physical intuitions. Manipulation by programming or configuration, what I’ll call programmatic interfaces, trades off that interaction freedom for more power and precision — programs, like scripts or structured queries, can manipulate abstract concepts like repetition or parameters of some software behavior that we can’t or don’t represent directly. It makes sense to directly manipulate lines of text and image clips on a design canvas, but when looking up complex information from a database or inventing a new kind of texture for a video game scene, programmatic control fits better. Direct manipulation gives us intuitive understanding; programmatic interfaces give us power and leverage. How might we combine the benefits of both?

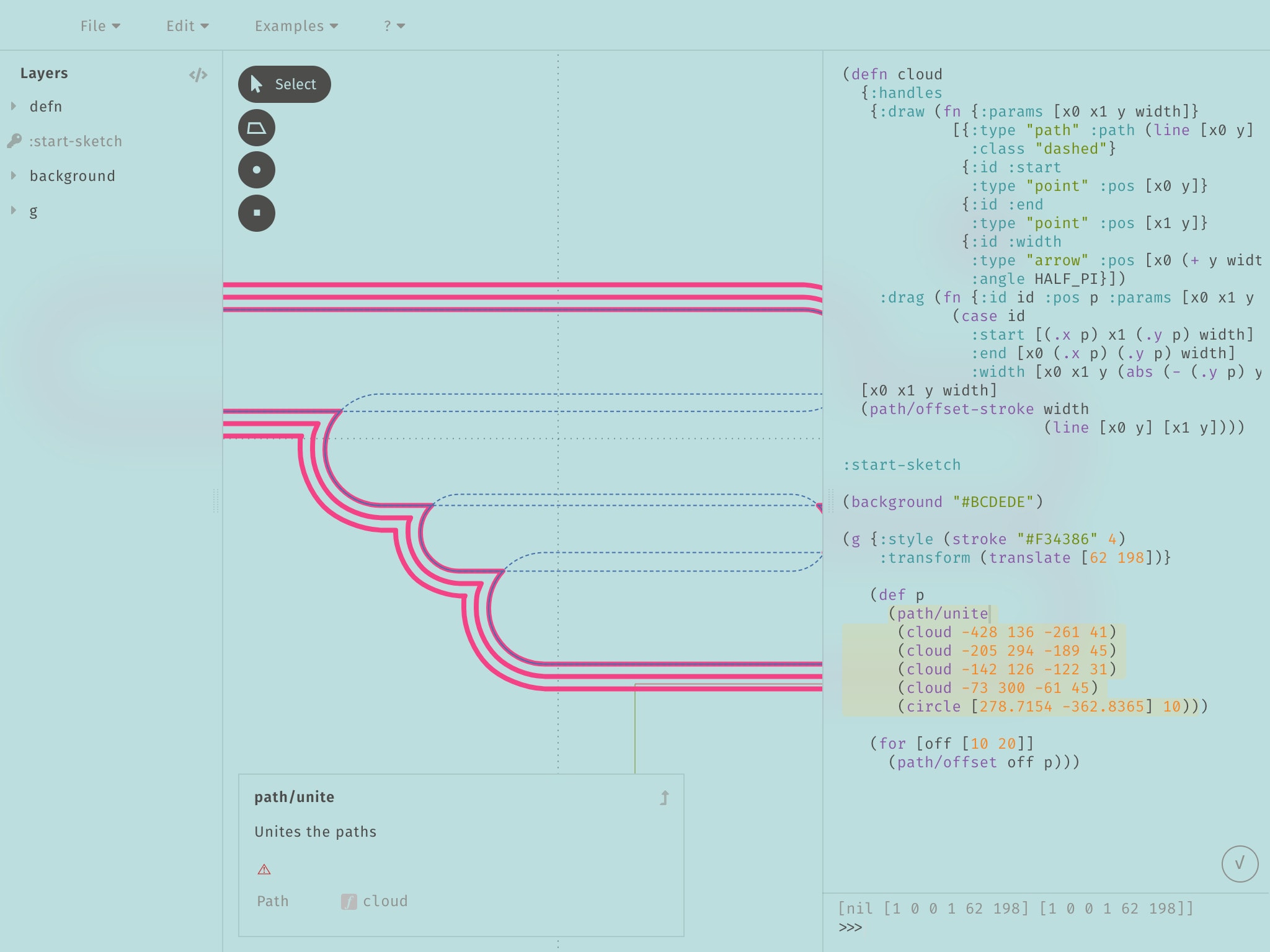

I started thinking about this after looking at Glisp, a programmatic drawing app that also lets users directly manipulate shapes in drawings generated by a Lisp program. It does this by, in effect, back-propagating changes through the computation graph into the program's source code.

For example, by dragging with my mouse to resize a circle on the canvas, I can change the number in the Lisp program's source code that computes the circle's radius. Kevin Kwok's G9 library does something similar: it renders 2D images from a JavaScript drawing program so that the drawings become "automatically interactive". This works because every time someone drags a point on the drawing, the input is backpropagated through the program (this time literally, through automatic differentiation) to modify the program so that it produces the new desired drawing.
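To make the "backpropagate the drag" idea concrete, here is a minimal sketch of the general technique, not G9's code or API. It assumes a hypothetical drawing program that places one point at distance `r` and angle `theta` from a fixed center; those two numbers stand in for the literals in the program's source. When the user drags the point, gradient descent nudges `r` and `theta` until the drawn point lands where the user dropped it. The gradients are derived by hand here in place of an automatic differentiation library, and the learning rate and step count are arbitrary assumptions.

```python
import math

# Hypothetical drawing program: one point at distance `r` and angle `theta`
# from a fixed center. (r, theta) play the role of numbers in the source code.
CENTER = (0.0, 0.0)


def draw(params):
    """Forward direction: program parameters -> drawn point."""
    r, theta = params
    cx, cy = CENTER
    return (cx + r * math.cos(theta), cy + r * math.sin(theta))


def loss_and_grad(params, target):
    """Squared distance from the drawn point to where the user dragged it,
    plus its gradient with respect to (r, theta), derived by hand."""
    r, theta = params
    x, y = draw(params)
    dx, dy = x - target[0], y - target[1]
    loss = dx * dx + dy * dy
    # Chain rule: d(loss)/dp = 2*dx * dx/dp + 2*dy * dy/dp
    d_r = 2 * dx * math.cos(theta) + 2 * dy * math.sin(theta)
    d_theta = 2 * dx * (-r * math.sin(theta)) + 2 * dy * (r * math.cos(theta))
    return loss, (d_r, d_theta)


def backpropagate_drag(params, target, lr=0.05, steps=2000, tol=1e-12):
    """Backward direction: adjust the parameters by gradient descent until
    the program's output matches the dragged position."""
    r, theta = params
    for _ in range(steps):
        loss, (g_r, g_theta) = loss_and_grad((r, theta), target)
        if loss < tol:
            break
        r -= lr * g_r
        theta -= lr * g_theta
    return (r, theta)


# The point starts at (1, 0); the user drags it to (0, 2).
new_params = backpropagate_drag((1.0, 0.0), target=(0.0, 2.0))
print(new_params)  # r converges to about 2.0 and theta to about pi/2
```

The drag never edits the drawing directly: it only changes the parameters, and the drawing is recomputed from them, so the program stays the single source of truth.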

I really like this pattern of "push the interactions backwards through the computation graph to compute new initial states". Its benefits are obvious for graphical programs, but it could also be useful for more "information processing" programs. Imagine:

- Reordering pieces of a table of contents with drag-and-drop to restructure and reword a piece of writing.

- Searching for the weather, then dragging the temperature slider to 70 degrees to watch the date move forward in time, to find out when it’s going to get warm.

- Looking up the definition of a word, then tweaking the definition slightly by editing the text in-place to discover a new word that means something slightly different.

- Synthesizing a summary of an essay or meeting notes, and adding bullet points to it to edit the original document’s longform prose.
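Not every backward step needs gradients. In the weather example, the forward computation is a lookup from date to temperature, so "dragging the temperature slider" can be handled by searching for the input that produces the requested output. A minimal sketch, assuming made-up forecast values and hypothetical function names (a real app would query a weather service):

```python
from datetime import date, timedelta

# Hypothetical forecast values for illustration only.
FORECAST = {
    date(2024, 4, 1) + timedelta(days=i): temp
    for i, temp in enumerate([58, 61, 60, 64, 67, 66, 71, 73, 69, 75])
}


def forecast_temperature(day):
    """Forward direction: date -> forecast temperature."""
    return FORECAST[day]


def date_for_temperature(target_temp, start):
    """Backward direction: the earliest forecast date on or after `start`
    whose temperature reaches `target_temp`, or None if none does."""
    for day in sorted(FORECAST):
        if day >= start and forecast_temperature(day) >= target_temp:
            return day
    return None


# Dragging the slider to 70 degrees moves the date forward to the first warm day.
print(date_for_temperature(70, start=date(2024, 4, 1)))
```

This is inversion by search rather than backpropagation; it works because the input space (a short list of dates) is small enough to enumerate.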

Some of these are possible today; others, like propagating edits to a summary back into source documents, require rethinking the way information flows through big AI models. My past work on reversible and composable semantic text manipulations in the latent space of language models seems relevant here as well, and I've lightly experimented with things like "reversible summary edits" using it.

One way to think about language models is as a programmatic interface to unstructured information. With that versatility and power also come indirection and loss of control. Techniques for "backpropagating through reasoning" like this might be an interesting way to bridge the gap.
