I’ve long been enamored by DALL-E 2’s specific flavor of visual creativity. Especially given the text-to-image AI system’s age, it seems to have an incredible command over color, light and dark, the abstract and the concrete, and the emotional resonance that their careful combination can conjure.

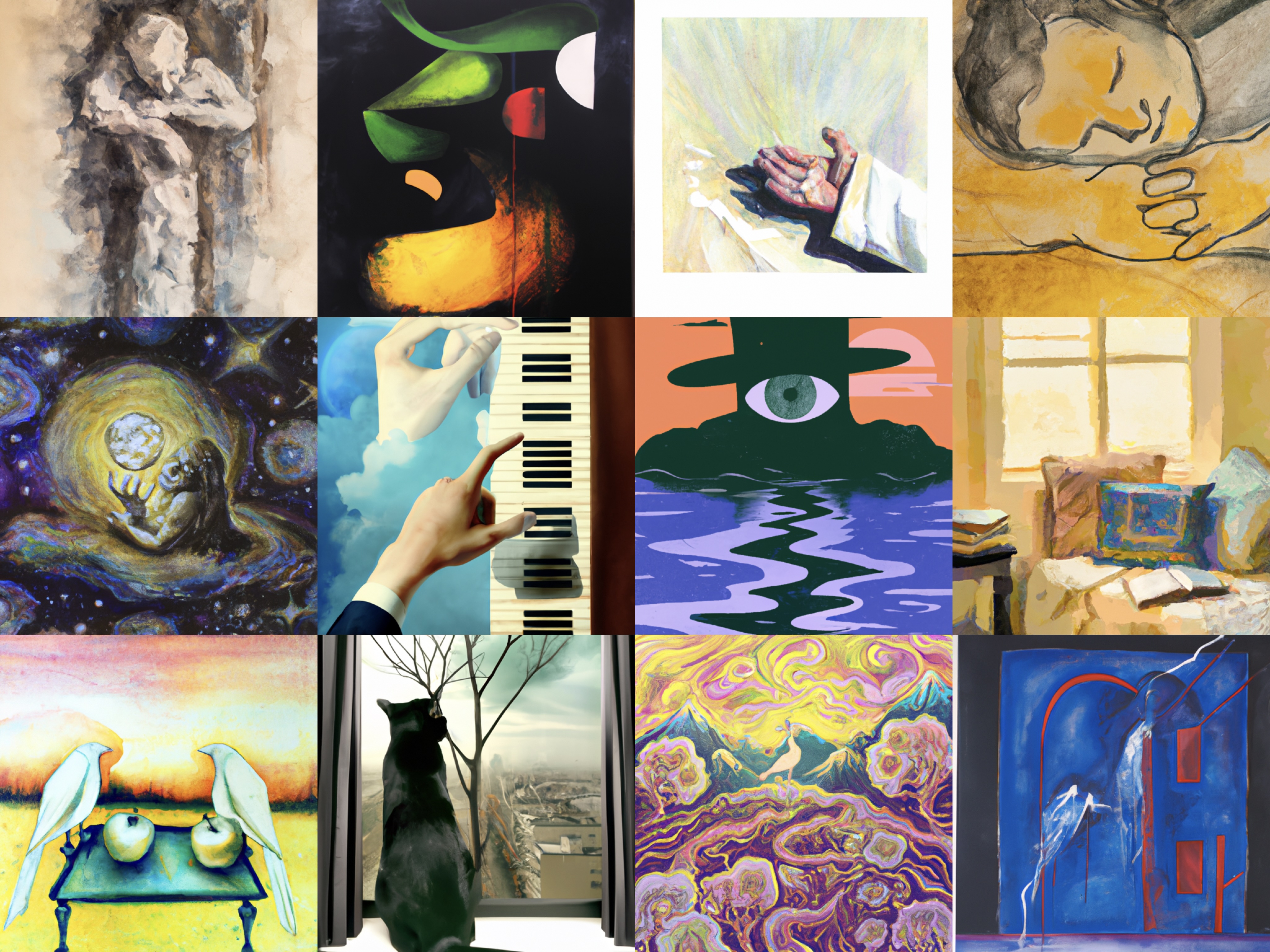

I picked these twelve images out of a much larger batch I generated with DALL-E 2 automatically by combining some randomly generated subjects with one of a few pre-written styles suffixes like “watercolor on canvas.”

Notice the use of shadows behind the body in the first image and the impressionistic use of color in the third image in the first row. I also love the softness of the silhouette in the top right, and the cyclops figure that seems to emerge beyond the horizon in the second row. Even in the most abstract images in this grid, the choice of color and composition result in something I would personally find not at all out of place in a gallery. There is surprising variety, creativity, and depth to these images, especially considering most of the prompts are as simple as giving form to metaphor, watercolor on canvas or a cozy bedroom, still life composition.

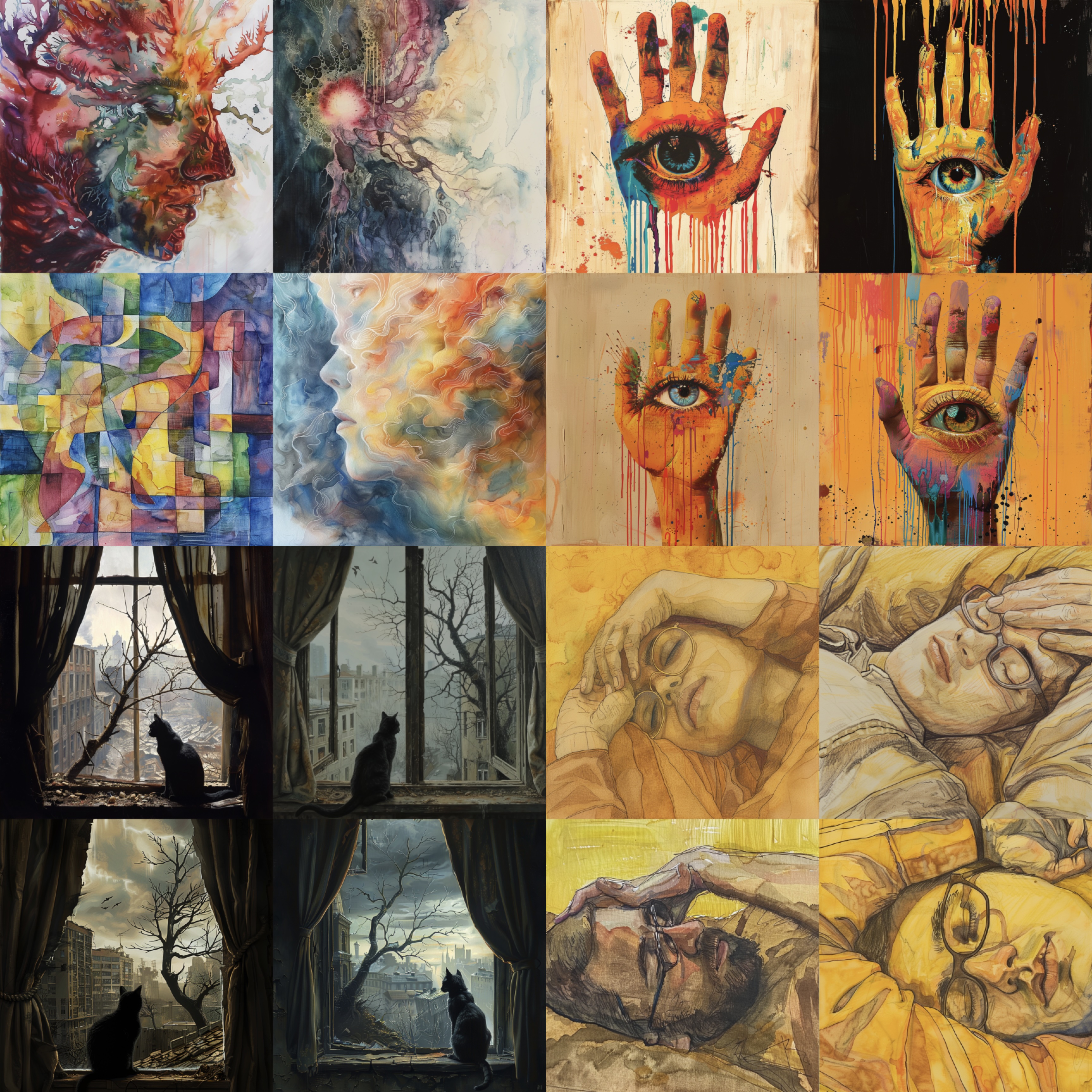

When I try to create similar kinds of images with what I believe to be the state-of-the-art text-to-image system today, Midjourney v6, here’s what I get with similar prompts.

These images are beautiful in their own right, and in their detail and realism they are impressive. I’m regularly stunned by the quality and realism in images generated by Midjourney. This isn’t meant to be another “AI-generated images aren’t artistic” post.

However, there is a very obvious difference in the styles of these two systems. After having generated a few hundred images from both systems, I find DALL-E 2 to be regularly:

- surprising and creative with its use of color and contrast;

- usually focused on a single subject, with one or few things going on in the frame;

- quite inventive in how it interprets ambiguous or open-ended prompts, often bringing in varied appropriate subjects on its own.

By contrast, Midjourney’s images are biased towards:

- a lot of detail all the time, almost as if the system really wants to put every single pixel to use;

- pretty uniform, flat use of color compared to DALL-E 2’s;

- literal interpretations of the prompt, with a tendency to have the image always be “about” something concrete with little room for ambiguity.

Though Midjourney v6 is the most capable system like this in my experience, I encounter these same stylistic biases when using any modern model from the last couple of years, like Stable Diffusion XL and its derivatives, Google’s Imagen models, or even the current version of DALL-E (DALL-E 3). This is a shame, because I really like the variety and creativity of outputs from DALL-E 2, and it seems no modern systems are capable of reproducing similar results.

I’ve also done some head-to-head comparisons, including giving Midjourney examples of images from which it could transfer the style. Though Midjourney v6 successfully copies the original image’s style, it still has the hallmark richness in detail, as well as a clear tendency towards concrete subjects like realistic human silhouettes:

Though none of this is a scientifically rigorous study, I’ve heard similar sentiments from other users of these systems, and observed similar “un-creative” behavior from modern language models like ChatGPT. In particular, I found this study of distribution of outputs before and after preference tuning on Llama 2 models interesting, because I think they successfully quantify the bland “ChatGPT voice” and show some concrete ways that reinforcement learning has produced accidental attractors in the model’s output space.

Why does this happen?

There are a few major differences between DALL-E 2 and other systems that we could hypothetically point to:

- They’re trained on very different datasets.

- DALL-E 2 never underwent any preference tuning, where the other models have.

- DALL-E 2 is the only pixel-space image diffusion model still in wide use. All other models perform diffusion in a compressed latent space, which may hamper things like the diversity of color palette.

After thinking about it a bit and playing with some open source models, I think there are two big things going on here.

The first is that humans simply prefer brighter, more colorful, more detailed images when asked to pick a “better” visual in a side-by-side comparison, even though they would not necessarily prefer a world in which every single image was so hyper-detailed and hyper-colorful. So when models are tuned to human preferences, they naturally produce these hyper-detailed, hyper-colorful sugar-pop images.

The second is that when a model is trained using a method with feedback loops like reinforcement learning, it tends towards “attractors”, or preferred modes in the output space, and stops being an accurate model of reality in which every concept is proportionately represented in its output space. Preference tuning tunes models away from being accurate reflections of reality into being greedy reward-seekers happy to output a boring response if it expects the boring output to be rated highly.

Let’s investigate these ideas in more detail.

1. Are we comparing outputs, or comparing worlds?

If you’ve ever walked into an electronics store and looked at a wall full of TVs or listened to headphones on display, you’ll notice they’re all tuned to the brightest, loudest, most vibrant settings. Sometimes, the colors are so vibrant they make pictures look a bit unrealistic, with perfectly turquoise oceans and perfectly tan skin.

In general, when asked to compare images or music, people with untrained eyes and ears will pick the brightest images and the loudest music. Bright images create the illusion of vibrant colors and greater detail, and make other images that are less bright seem dull. Loud music has a similar effect, giving rise to a “loudness war” on public radio where tracks compete to catch listeners’ attention by being louder than other tracks before or after it.

Now, we are also in a loudness war of synthetic media.

Another way to think about this phenomenon is as a failure to align what we are asking human labelers to compare with what we actually want to compare.

When we build a preference dataset, what we should actually be asking is, “Is a world with a model trained on this dataset preferable to a world with a model trained on that dataset?” Of course, this is an intractable question to ask, because doing so would require somehow collecting human labels on every possible arrangement of a training dataset, leading to a combinatorial explosion of options. Instead, we approximate this by collecting human preference signals on each individual data point. But there’s a mismatch: just because humans prefer a more detailed image in one instance doesn’t mean that we’d prefer a world where every single image was maximally detailed.

2. Building attractors out of world models

Preference tuning methods like RLHF and DPO are fundamentally different from the kind of supervised training that goes on during model pretraining or a “basic” fine-tuning run with labelled data, because methods like RL and DPO involve feeding the model’s output back into itself, creating a feedback loop.

Whenever there are feedback loops in a system, we can study its dynamics — over time, as we iterate towards infinity, does the system settle into some state of stability? Does it settle into a loop? Does it diverge, accelerating towards some limit?

In the case of systems like ChatGPT and Midjourney, these models appear to converge under feedback loops into a few attractors, parts of the output space that the model has deemed reliably preferred, “safe” options. One attractor, for example, is a hyper-realistic detailed style of illustration. Another seems to be a fondness for geometric lines and transhumanist imagery when asked to generate anything abstract and vaguely positive.

I think recognizing this difference between base models and feedback-tuned models is important, because this kind of a preference tuning step changes what the model is doing at a fundamental level. A pretrained base model is an epistemically calibrated world model. It’s epistemically calibrated, meaning its output probabilities exactly mirror frequency of concepts and styles present in its training dataset. If 2% of all photos of waterfalls also have rainbows, exactly 2% of photos of waterfalls the model generates will have rainbows. It’s also a world model, in the sense that what results from pretraining is a probabilistic model of observations of the world (its training dataset). Anything we can find in the training dataset, we can also expect to find in the model’s output space.

Once we subject the model to preference tuning, however, the model transforms into something very different, a function that greedily and cleverly finds a way to interpret every input into a version of the request that includes elements it knows is most likely to result in a positive rating from a reviewer. Within the constraints of a given input, a model that’s undergone RLHF is no longer an accurate world model, but a function whose sole job is to find a way to render a version of the output that’s super detailed, very colorful, extremely polite, or whatever else the model has learned will please the recipient of its output. These reliably-rewarded concepts become attractors in the model’s output space. See also the apocryphal story about OpenAI’s model optimized for positive outputs, resulting in inescapable wedding parties.

Today’s most effective tools for producing useful, obedient models irreversibly take away something quite valuable that base models have by construction: its epistemic calibration to the world it was trained on.

Interpretable models enable useful AI without mode collapse

Though I find any individual output from ChatGPT or Midjourney useful and sometimes even beautiful, I can’t really say the same about the possibility space of outputs from these models at large. In tuning these models to our pointwise preferences, it feels like we lost the variety and creativity that enable these models to yield surprising and tasteful outputs.

Maybe there’s a way to build useful AI systems without the downsides of mode collapse.

Preference tuning is necessary today because of the way we currently interact with these AI systems, as black boxes which take human input and produce some output. To bend these black boxes to our will, we must reprogram their internals to want to yield output we prefer.

But there’s another growing paradigm for interacting with AI systems, one where we directly manipulate concepts within a model’s internal feature space to elicit outputs we desire. Using these methods, we no longer have to subject the model to a damaging preference tuning process. We can search the model’s concept space directly for the kinds of outputs we desire and sample them directly from a base model. Want a sonnet about the future of quantum computing that’s written from the perspective of a cat? Locate those concepts within the model, activate them mechanistically, and sample the model outputs. No instructions necessary.

Mechanistic steering like this is still early in research, and for now we have to make do with simpler tasks like changing the topic and writing style of short sentences. But I find this approach very promising because it could give us a way to make pretrained models useful without turning them into overeager human-pleasers that fall towards an attractor at the first chance they get.

Furthermore, sampling directly from a model’s concept space allows us to rigorously quantify qualities like diversity of output that we can’t control well in currently deployed models. Want variety in your outputs? Simply expand the radius around which you’re searching in the model’s latent space.

This world — directly interacting with epistemically calibrated models — isn’t incompatible with Midjourney-style hyper-realistic hyper-detailed images either. Perhaps when we have in our hands a well-understood, capable model of the world’s images we’ll find not only all the abstract images from DALL-E 2 and all the intricate illustrations from Midjourney, but an uncountable number of styles and preferences in between, as many as we have time to enumerate as we explore its vast space of knowledge.

Create things that come alive →

I share new posts on my newsletter. If you liked this one, you should consider joining the list.

Have a comment or response? You can email me.