This post is a read-through of a talk I gave at a demo night at South Park Commons in July 2024.

I’ve spent my career investigating how computers could help us not just store the outputs of our thinking, but actively aid in our thinking process. Recently, this has involved building on top of advancements in machine learning.

In Imagining better interfaces to language models, I compared text-based, conversational interaction paradigms powered by language models to command-line interfaces, and proposed a new direction of research that would be akin to a GUI for language models, wherein we could directly see and manipulate what language models were “thinking”.

In Prism, I adapted some recent breakthroughs in model interpretability research to show how we can “see” what a model sees in a piece of input. I then used interpretable components of generative models to decompose various forms of media into their constituent concepts, and edit these documents and media in semantic latent space by manipulating the concepts that underlie them.

Most recently, in Synthesizer for thought, I began exploring the rich space of interface possibilities opened up by techniques like feature decomposition and steering outlined in the Prism project. These techniques allow us to understand and create media in entirely new ways at semantic and stylistic levels of abstraction.

These explorations were based on the premise that a foundational understanding of pieces of media as mathematical objects opens up new kinds of science and creative tools, based on our ability to study and imagine these forms of media at a deeper, more complete level:

- Before advanced understanding of optics and our sense of sight, we referred to colors by their names: red, blue, amber, turquoise. This was imprecise and ad hoc. With an improved science of light and visual perception came a mathematical model of color and vision. We began to reason about color and light as mathematical objects: waves with frequencies, and elements of geometric spaces called color spaces, like RGB, HSL, and YCrCb. This, combined with mechanical instruments to decompose light into its mathematical representation and synthesize it back out, gave us better creative tools, a richer vocabulary, and a way to systematically map and explore a fuller space of colors.

- Before advanced understanding of sound and hearing, we created music out of natural materials: rubbing strings together, hitting things, blowing air through tubes of various lengths. As we advanced our understanding of the physics of sound, we could imagine new kinds of sounds as mathematical constructs and then conjure them into reality, creating entirely new kinds of sounds we could never have created with natural materials. We could also sample sounds from the real world and modulate their mathematical structure. Not only that: backed by our mathematical model of sound, we could systematically explore the space of possible sounds and filters.

Interpretable language models can give us a similar foundational understanding of ideas and thoughts as mathematical objects. With Prism, I demonstrated how, by treating them as mathematical objects, we can similarly break down and recompose sentences and ideas.

Today, I want to share with you some of my early design explorations for what future creative thinking tools may look like, based on these techniques and ideas. This particular exploration involves what I’ve labelled a computational notebook for ideas. This computational notebook is designed based on one cornerstone principle: documents aren’t a collection of words, but a collection of concepts.

Intro – Here, I’ve been thinking about a problem very dear to my heart. I want to explore creating a community of researchers interested in the future of computing, AI, and interface explorations. I simply write that thought in my notebook.

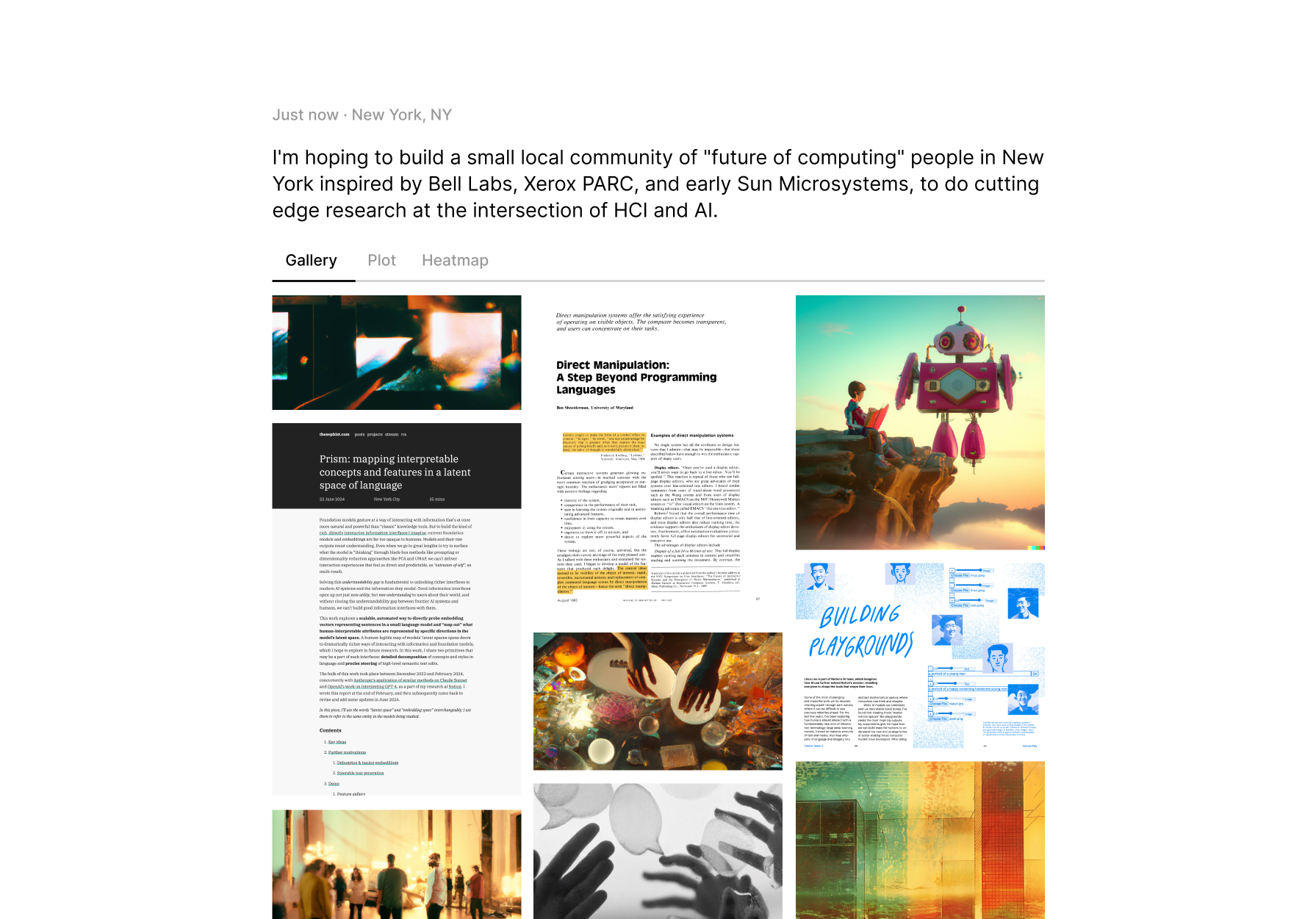

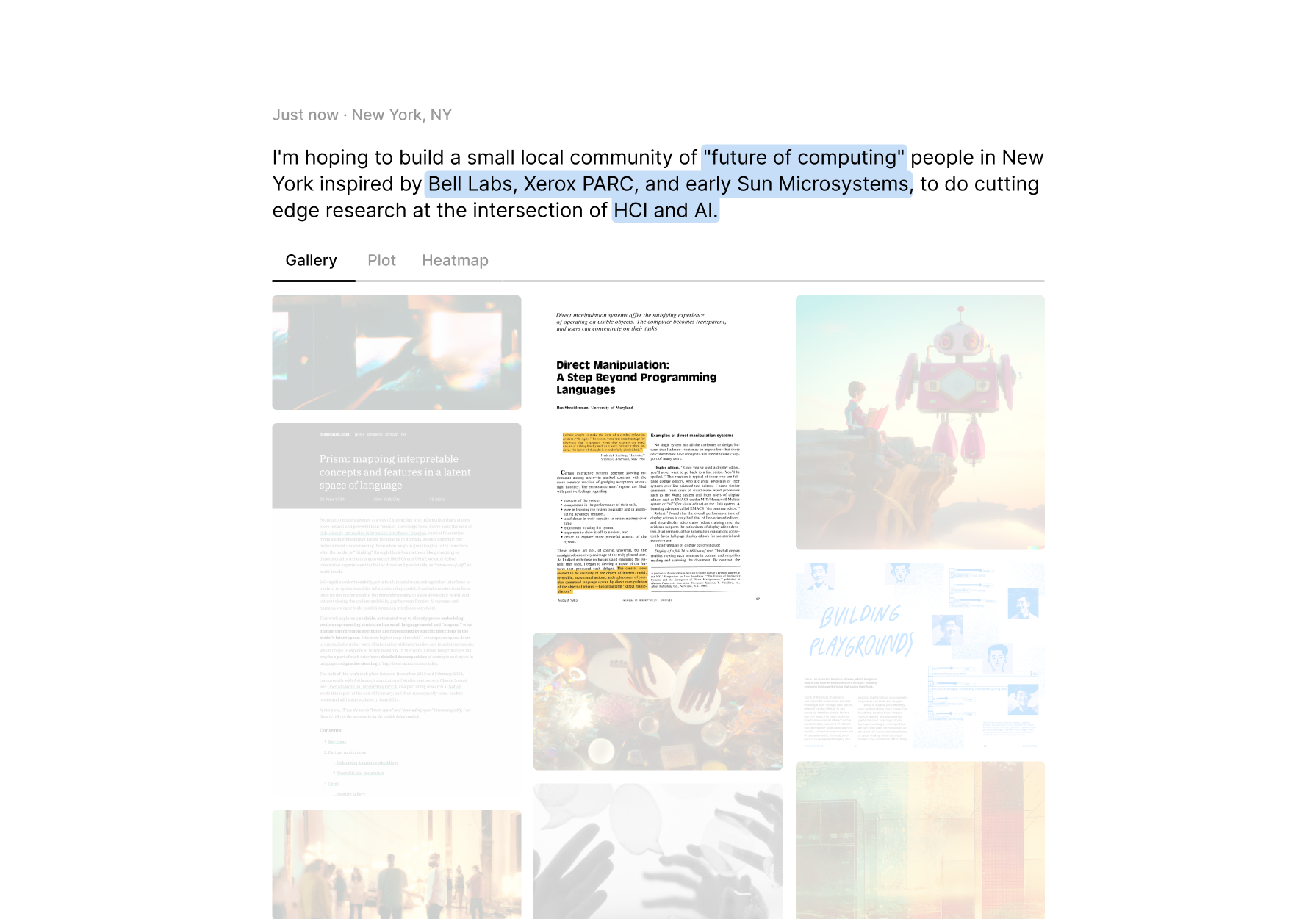

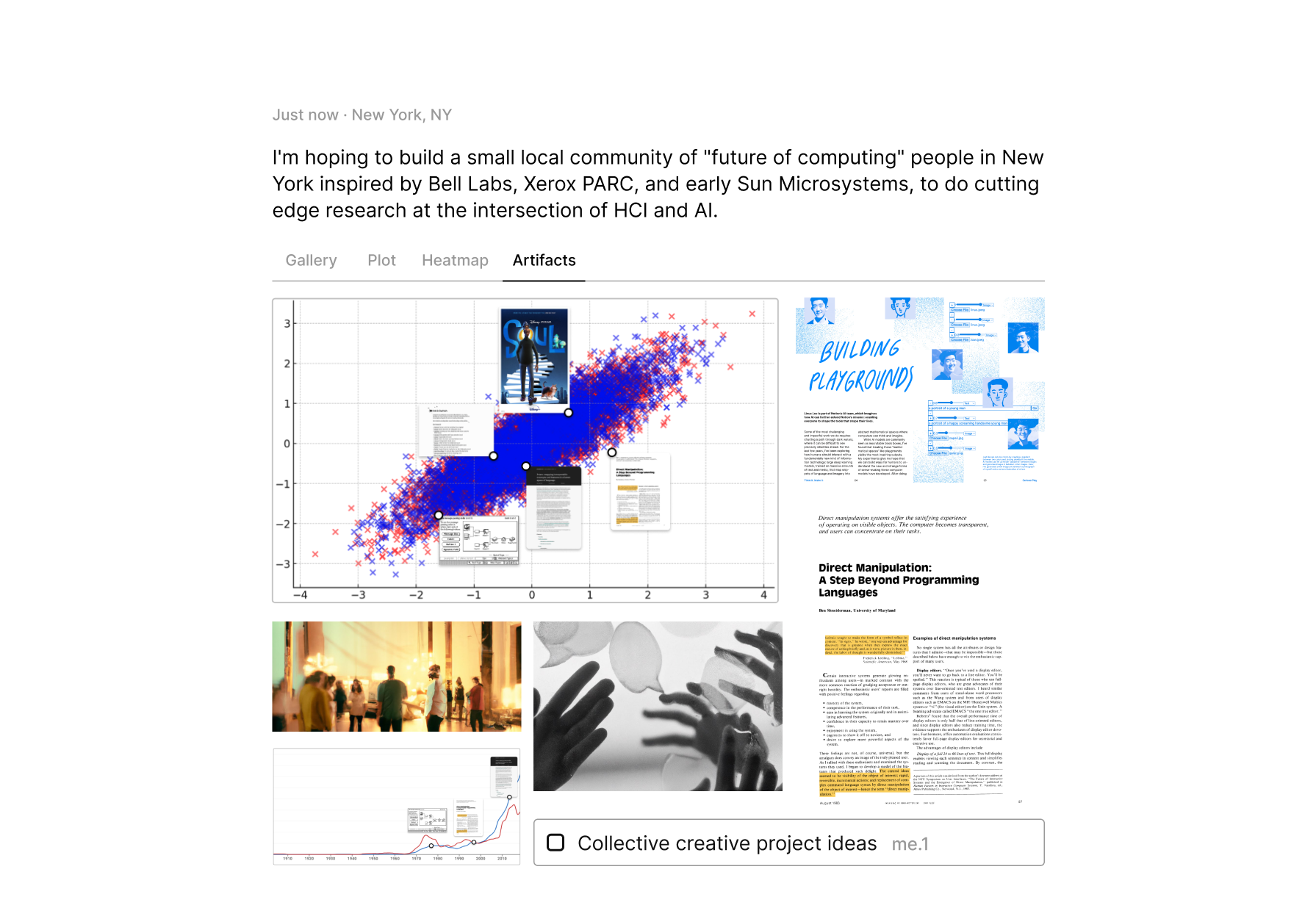

Documents – When we think within our minds, a thought conjures up many threads of related ideas. In this notebook, writing down a thought brings up a gallery of related pieces of media, from images to PDFs to simple notes. These documents may be fetched from my personal library of collected documents, or from the wider Web.

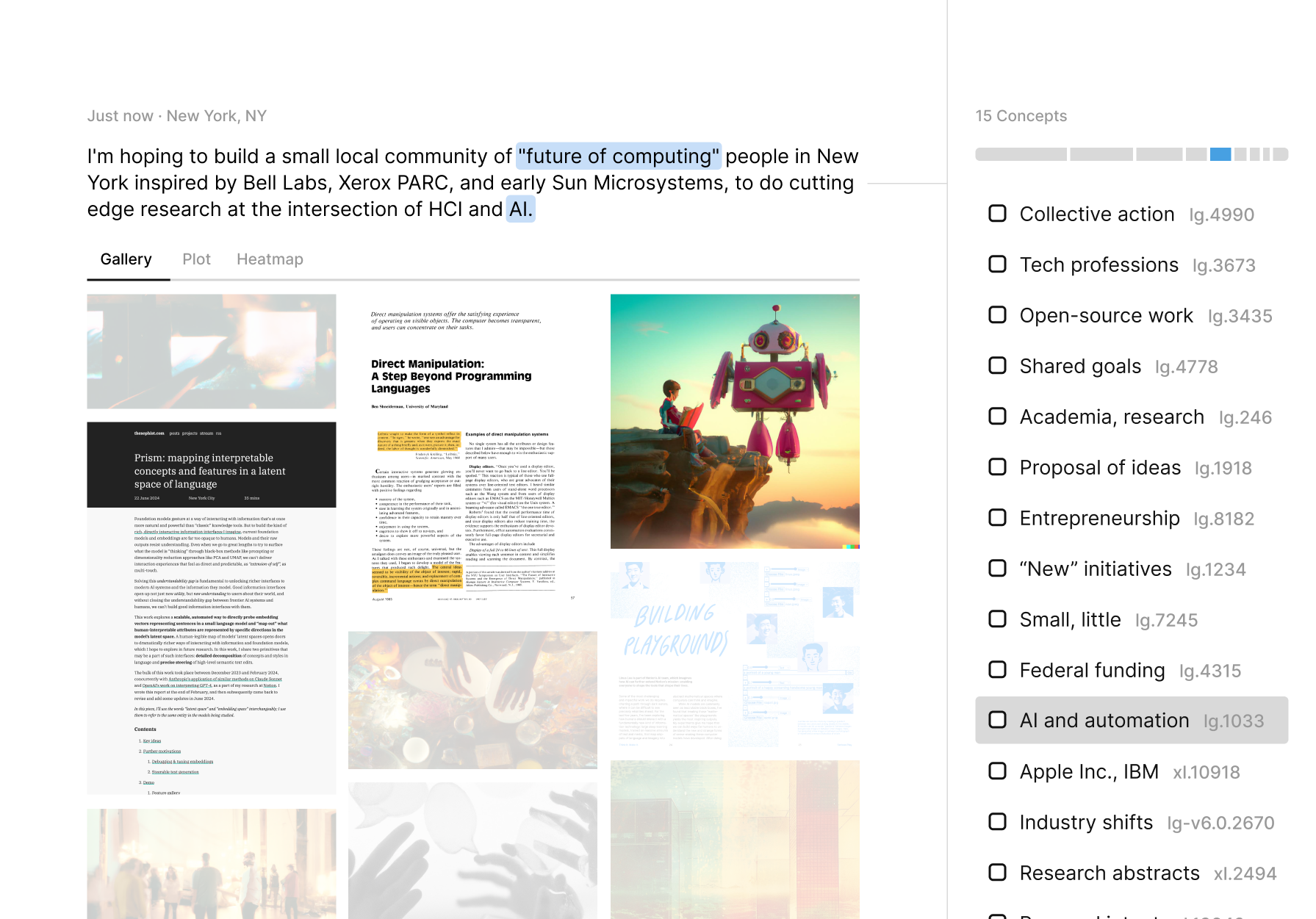

But unlike many other tools with similar functionality, this notebook treats documents as a set of ideas. So in addition to seeing which media are similar, we can see which concepts they share. As we hover over different documents, we can see which part of our input recalled each one.

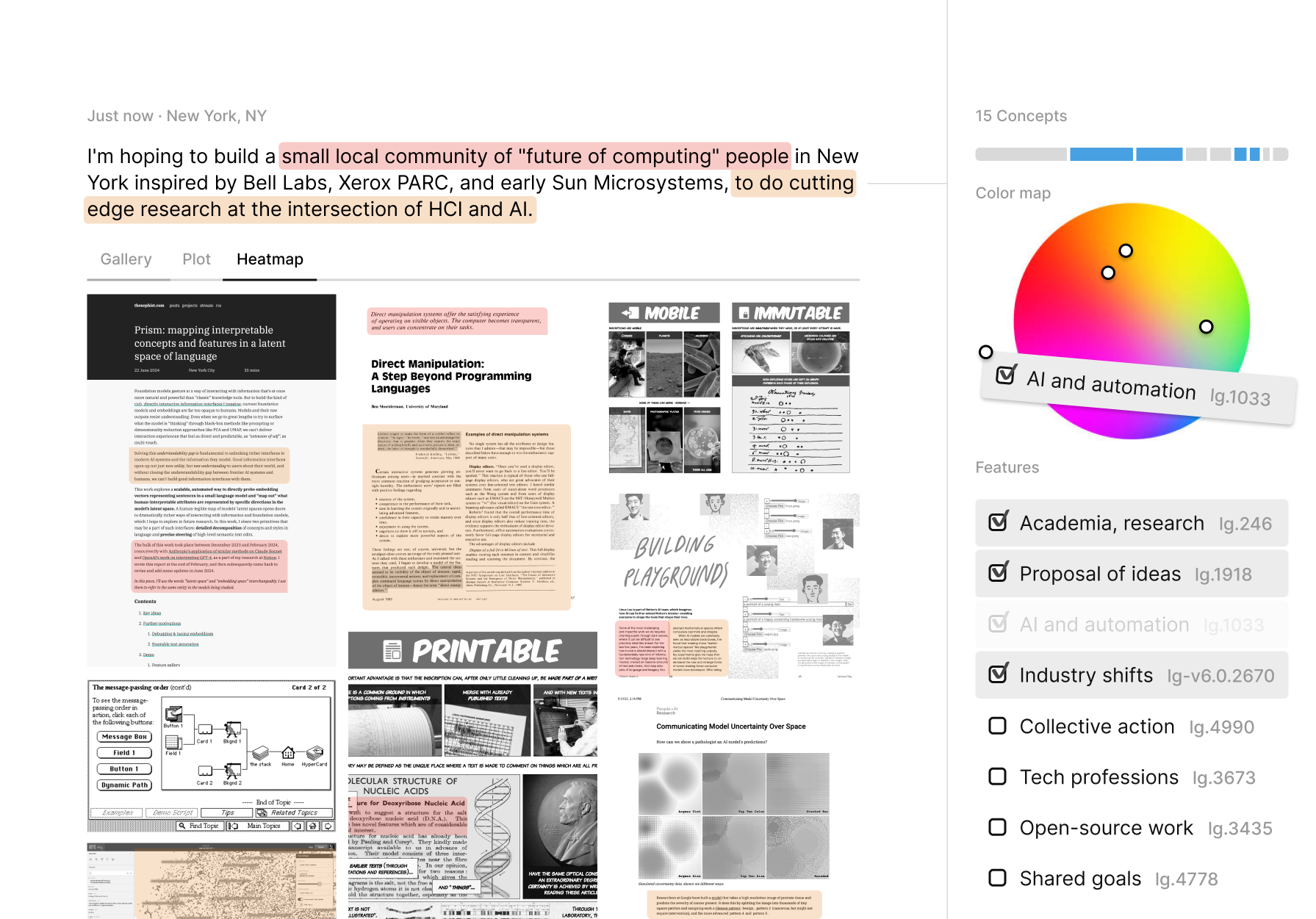

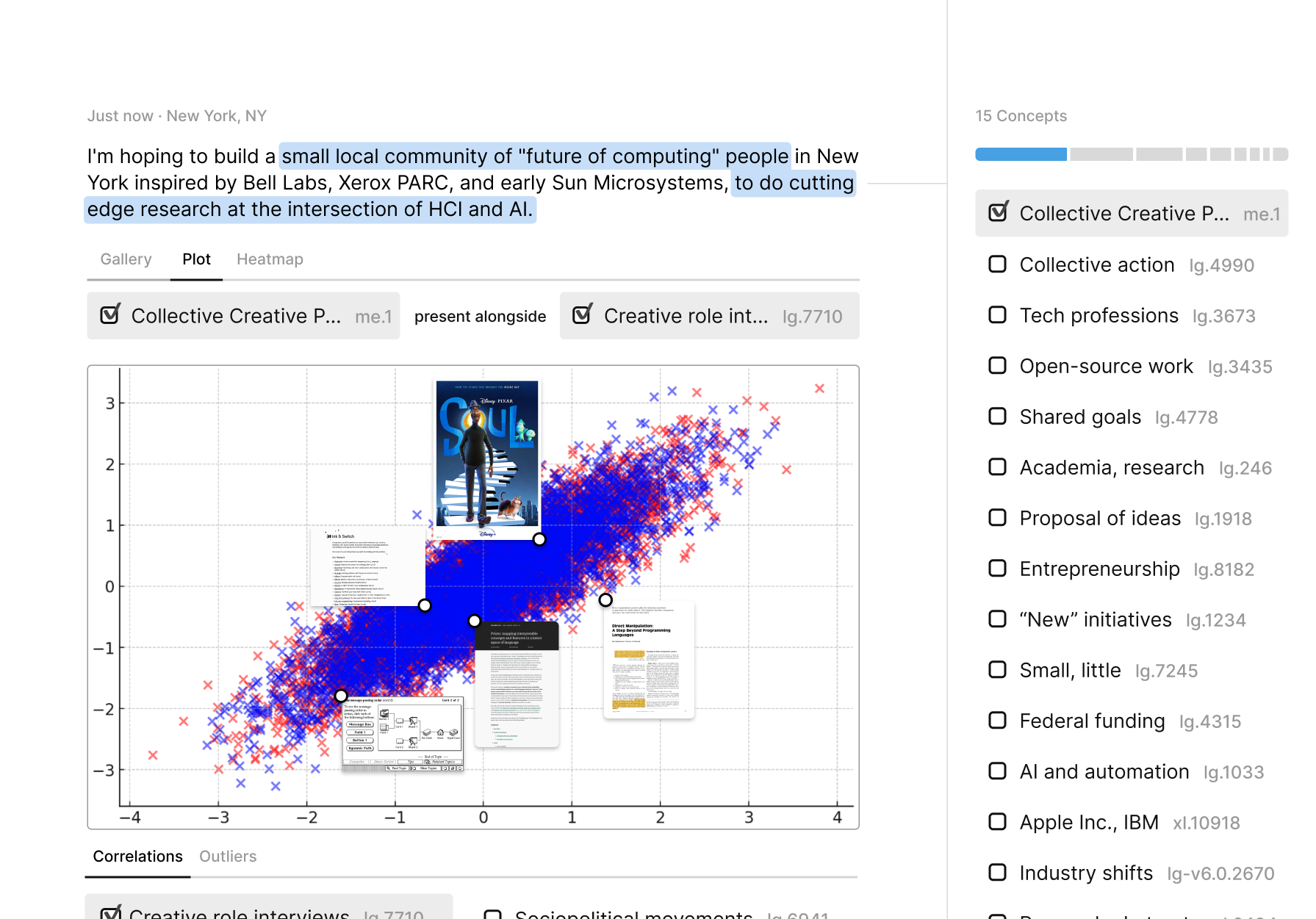
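The post doesn't describe how the notebook computes this. Here is a minimal sketch, assuming the input and each document have already been decomposed into concept activations, represented as a dict from a concept name to a nonnegative strength. The concept names, threshold, and values are hypothetical. Shared concepts are those active in both, and the part of the input that "recalled" a document is taken to be the segment sharing the most concept weight with it.

```python
# Hypothetical representation: concept name -> nonnegative activation strength.
# A real system would get these from a feature decomposition of a model's
# internal representation; here they are made-up toy values.
Concepts = dict[str, float]


def merge(segments: dict[str, Concepts]) -> Concepts:
    """Combine per-segment activations into one profile (max over segments)."""
    merged: Concepts = {}
    for acts in segments.values():
        for name, value in acts.items():
            merged[name] = max(merged.get(name, 0.0), value)
    return merged


def shared_concepts(a: Concepts, b: Concepts, threshold: float = 0.1) -> Concepts:
    """Concepts active in both profiles, scored by the weaker activation, strongest first."""
    shared = {
        name: min(a[name], b[name])
        for name in a.keys() & b.keys()
        if a[name] >= threshold and b[name] >= threshold
    }
    return dict(sorted(shared.items(), key=lambda kv: kv[1], reverse=True))


def recalling_segment(segments: dict[str, Concepts], doc: Concepts) -> str:
    """The input segment sharing the most concept weight with a document (shown on hover)."""
    return max(segments, key=lambda seg: sum(shared_concepts(segments[seg], doc).values()))


input_segments = {
    "create a community of researchers": {"community": 0.9, "research": 0.7},
    "the future of computing, AI, and interfaces": {"ai": 0.8, "interfaces": 0.6},
}
doc = {"ai": 0.5, "interfaces": 0.9, "typography": 0.4}

print(shared_concepts(merge(input_segments), doc))  # {'interfaces': 0.6, 'ai': 0.5}
print(recalling_segment(input_segments, doc))  # the future of computing, AI, and interfaces
```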

Concepts – So far, we’ve navigated our idea space by pivoting around documents. We can also pivot around concepts. To do this, we open the concepts sidebar to see the full list of features, or concepts, that our input contains. This view is like a brain scan of a model as it reads our input, or a DNA reading of our thought.

As we hover over different concepts in our input, we can see which pieces of media in our library share that particular concept.

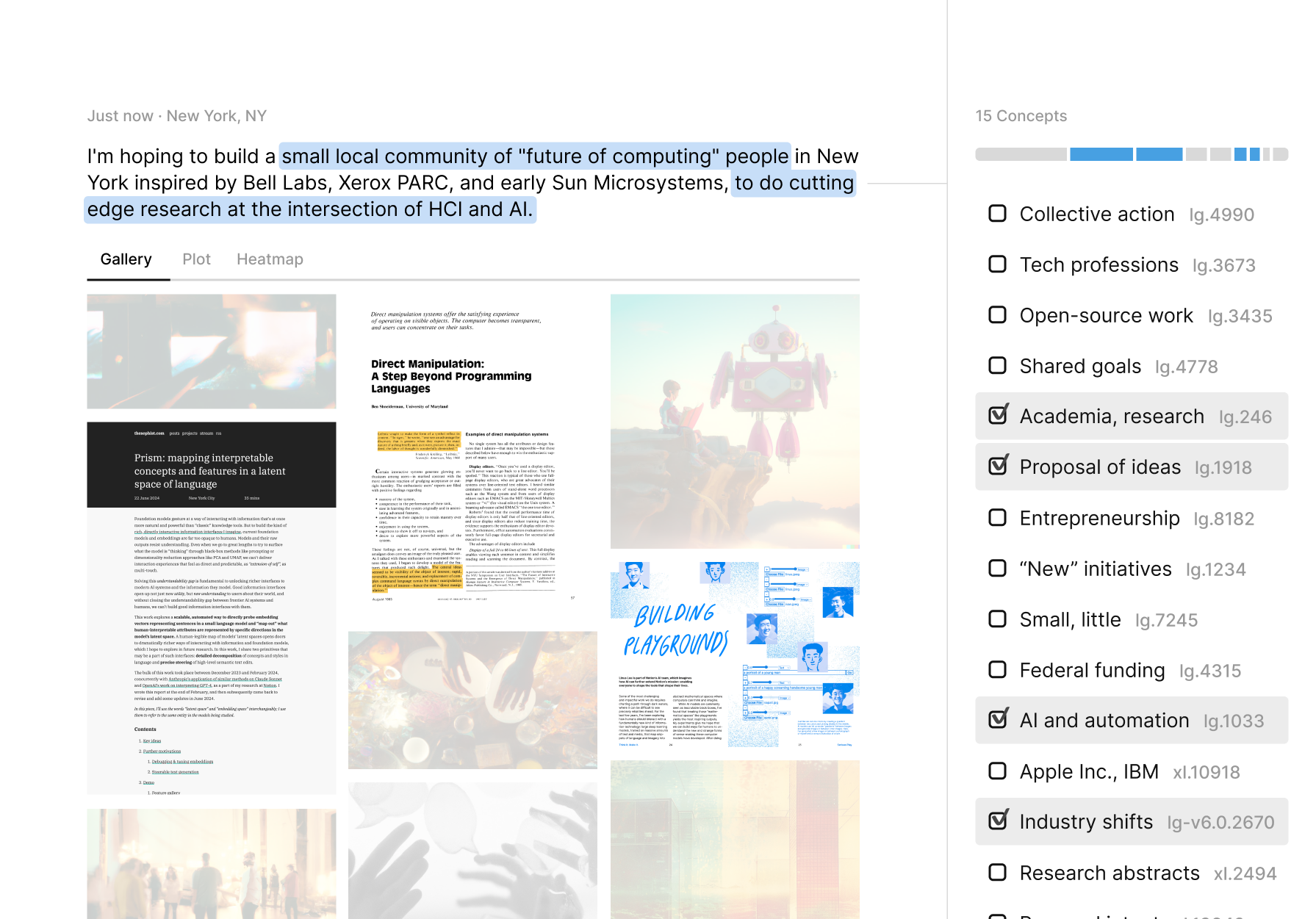
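Under the same kind of assumption (hypothetical concept-activation dicts with made-up values), the sidebar can be sketched as a thresholded, sorted list of the input's concepts, and hovering over a concept as a lookup in an inverted index from concepts to documents. `active_concepts` and `build_concept_index` are illustrative names, not the notebook's actual API.

```python
from collections import defaultdict

# Hypothetical toy library: document -> {concept name: activation}. Made-up values.
library = {
    "bell-labs-history.pdf": {"industrial research": 0.9, "ai": 0.2},
    "ai-interfaces-note.md": {"ai": 0.8, "interfaces": 0.7},
    "film-director-interview.html": {"creative leadership": 0.9},
}


def active_concepts(acts: dict[str, float], threshold: float = 0.1) -> list[str]:
    """The sidebar list: concepts at or above threshold, strongest first."""
    return sorted((name for name, v in acts.items() if v >= threshold),
                  key=lambda name: acts[name], reverse=True)


def build_concept_index(library: dict[str, dict[str, float]],
                        threshold: float = 0.1) -> dict[str, list[str]]:
    """Inverted index: concept -> documents expressing it, strongest first."""
    hits: dict[str, list[tuple[float, str]]] = defaultdict(list)
    for doc_id, acts in library.items():
        for name, value in acts.items():
            if value >= threshold:
                hits[name].append((value, doc_id))
    return {name: [doc_id for _, doc_id in sorted(pairs, reverse=True)]
            for name, pairs in hits.items()}


input_acts = {"community": 0.9, "ai": 0.8, "interfaces": 0.6, "cooking": 0.05}
index = build_concept_index(library)

for concept in active_concepts(input_acts):
    # Hovering a concept in the sidebar looks up the media that share it.
    print(concept, "->", index.get(concept, []))
```

Building the index once means each hover is a dictionary lookup rather than a scan of the whole library.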

Composition – A key part of thinking is inventing new ideas by combining existing ones. In this story, I’m interested in both large industrial research bets and AI. By selecting both concepts at once, we can see which pieces of media express this new, higher-level concept.
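One way to sketch composition, assuming the same kind of hypothetical concept activations, is a fuzzy AND: score each document by its weakest activation among the selected concepts. This scoring rule is an assumption; the post doesn't specify one.

```python
# Hypothetical toy library with made-up concept activations.
library = {
    "bell-labs-history.pdf": {"industrial research": 0.9, "ai": 0.2},
    "ai-interfaces-note.md": {"ai": 0.8, "interfaces": 0.7},
    "deepmind-profile.html": {"industrial research": 0.6, "ai": 0.7},
}


def compose(library: dict[str, dict[str, float]], selected: list[str],
            top_k: int = 5) -> list[tuple[str, float]]:
    """Rank documents by how strongly they express ALL selected concepts.

    A document's score is its weakest activation among the selected concepts
    (a fuzzy AND), so it only ranks highly if every concept is present.
    """
    scored = [(min(acts.get(c, 0.0) for c in selected), doc_id)
              for doc_id, acts in library.items()]
    ranked = sorted((pair for pair in scored if pair[0] > 0), reverse=True)
    return [(doc_id, score) for score, doc_id in ranked[:top_k]]


print(compose(library, ["industrial research", "ai"]))
# [('deepmind-profile.html', 0.6), ('bell-labs-history.pdf', 0.2)]
```

Taking the minimum means a document that is strong in only one of the selected concepts can't dominate the results; a product or geometric mean would be softer alternatives.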

Heatmap – We can get an even more detailed view of the relationships between these individual concepts using a heatmap. In this view, we assign a distinct color to each concept and see how they co-occur across our media library. Through this view, we can not only discover documents that contain these concepts together, but also find deeper relationships: perhaps, for example, that many papers on AI and automation later go on to discuss industrial shifts.

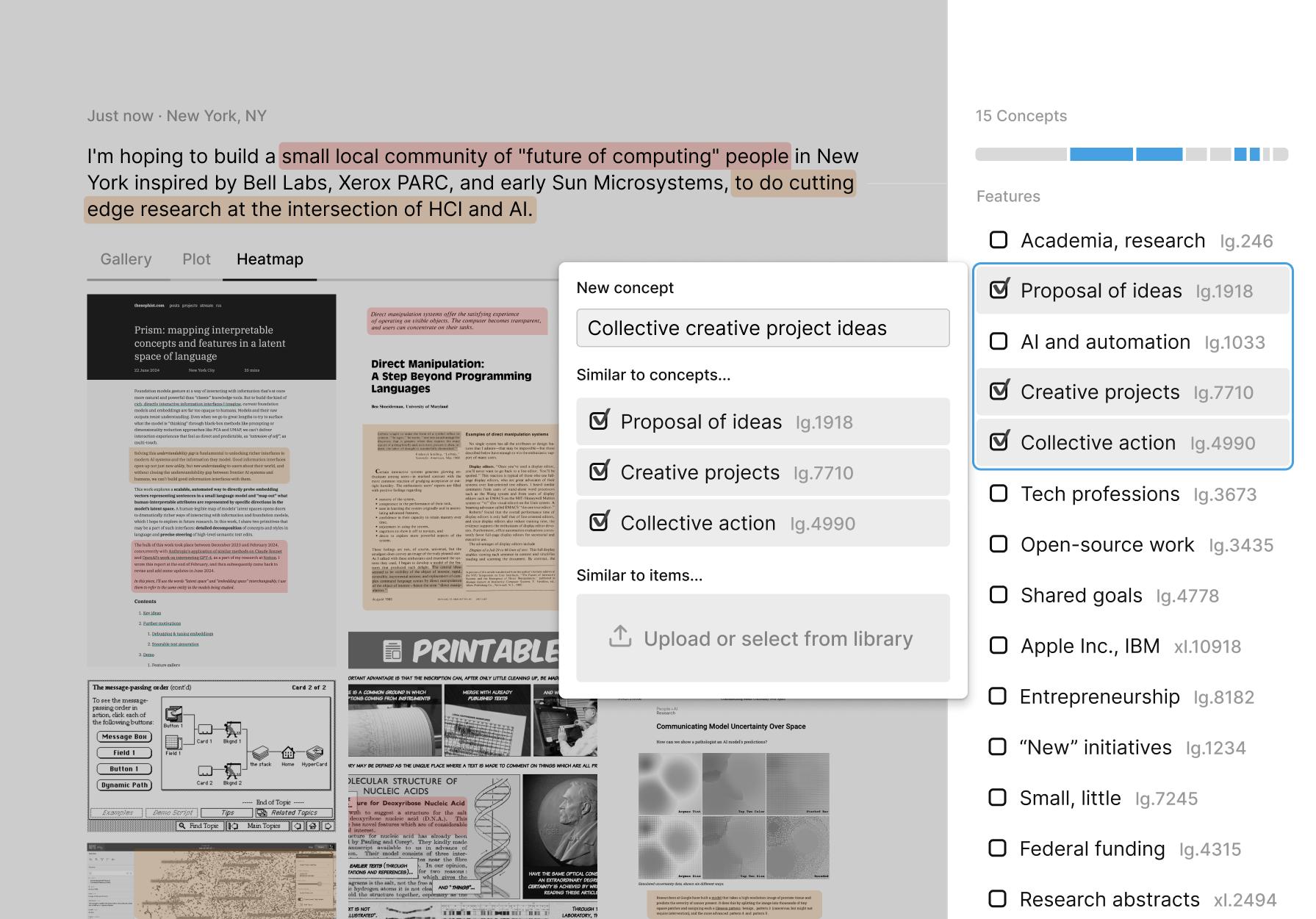
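The data behind such a heatmap could be as simple as a concept co-occurrence matrix. A minimal sketch, assuming each document has hypothetical concept activations and that a concept counts as present at or above an arbitrary threshold. It covers co-occurrence only, not the temporal ordering ("later discuss") a richer view might show.

```python
from itertools import combinations

# Hypothetical toy library with made-up concept activations.
library = {
    "paper-a.pdf": {"ai": 0.8, "automation": 0.6},
    "paper-b.pdf": {"ai": 0.7, "automation": 0.3, "industrial shifts": 0.5},
    "paper-c.pdf": {"industrial shifts": 0.9},
}
concepts = ["ai", "automation", "industrial shifts"]


def cooccurrence(library: dict[str, dict[str, float]], concepts: list[str],
                 threshold: float = 0.1) -> dict[str, dict[str, int]]:
    """counts[a][b] = number of documents in which both a and b are active.

    The diagonal counts[a][a] is the number of documents containing a.
    """
    counts = {a: {b: 0 for b in concepts} for a in concepts}
    for acts in library.values():
        present = [c for c in concepts if acts.get(c, 0.0) >= threshold]
        for c in present:
            counts[c][c] += 1
        for a, b in combinations(present, 2):
            counts[a][b] += 1
            counts[b][a] += 1
    return counts


counts = cooccurrence(library, concepts)
for a in concepts:
    # Each row of the heatmap; a UI would map these counts to color intensity.
    print(f"{a:>18}", [counts[a][b] for b in concepts])
```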

Abstraction – If we find a composition of ideas we like, such as these three concepts about large-scale creative collaboration, we can select and group them into a new, custom concept, which I’ll call collective creative project ideas.

This workflow, in which detailed exploration of existing knowledge leads us to come up with a new idea, is a key part of the creative and scientific process that current AI-based tools can’t capture very well, because existing tools rarely let you see a body of knowledge through the lens of key ideas.

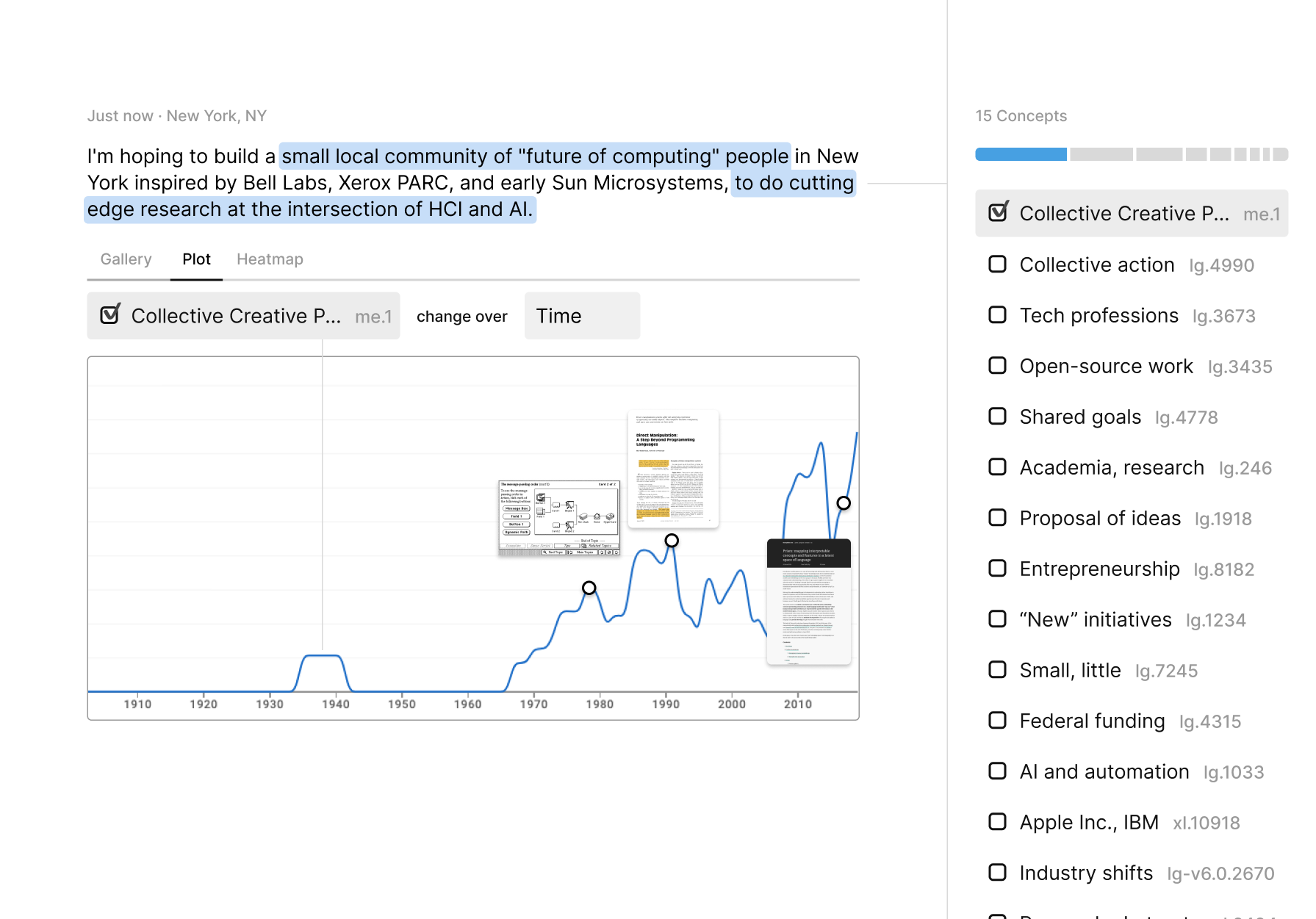
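A custom concept could be represented as a weighted group of existing concepts. The sketch below assumes documents carry hypothetical concept activations and scores a document by the weighted mean of its member concepts' activations; the class name, member names, and weights are all illustrative.

```python
from dataclasses import dataclass


@dataclass
class CustomConcept:
    """A user-defined concept built by grouping existing ones (hypothetical design)."""
    name: str
    members: dict[str, float]  # member concept -> weight

    def score(self, acts: dict[str, float]) -> float:
        """Weighted mean of the member concepts' activations in a document."""
        total = sum(self.members.values())
        return sum(w * acts.get(m, 0.0) for m, w in self.members.items()) / total


# The three member names are made up for illustration.
collective = CustomConcept(
    "collective creative project ideas",
    {"open collaboration": 1.0, "large-scale projects": 1.0, "creative communities": 1.0},
)

doc = {"open collaboration": 0.9, "creative communities": 0.6, "typography": 0.8}
print(round(collective.score(doc), 3))  # 0.5
```

Because it exposes the same kind of per-document score as a built-in concept, a custom concept like this could plug into the same lists, compositions, and charts.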

Visualization – Now that we have a new lens in the form of this custom concept, we can explore our entire media library through this lens using data graphics. We can see the evolution and prominence of our new concept over time, for example.

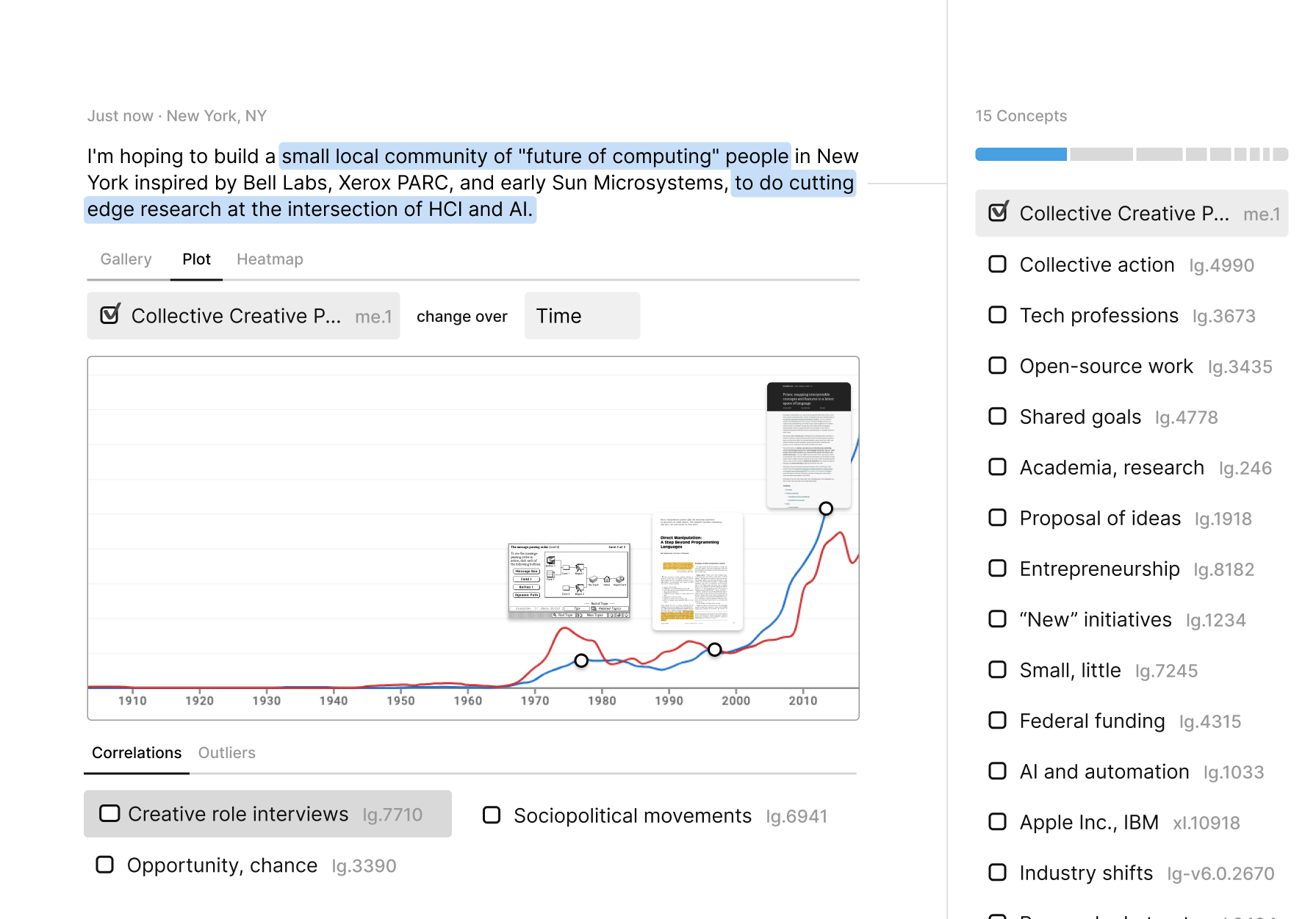
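To chart a concept's prominence over time, one simple approach is to average its score across the documents from each year. A sketch under the assumptions that every document has a year and hypothetical concept activations, and that the custom concept's score is the unweighted mean of its (made-up) member concepts:

```python
from collections import defaultdict

# Member concepts of a hypothetical custom concept, and a toy dated library
# of (year, {concept: activation}) pairs with made-up values.
members = ["open collaboration", "creative communities"]
docs = [
    (2021, {"open collaboration": 0.2}),
    (2021, {}),
    (2023, {"open collaboration": 0.8, "creative communities": 0.6}),
]


def concept_score(acts: dict[str, float], members: list[str]) -> float:
    """Unweighted mean activation of the custom concept's members."""
    return sum(acts.get(m, 0.0) for m in members) / len(members)


def prominence_by_year(docs: list[tuple[int, dict[str, float]]],
                       members: list[str]) -> dict[int, float]:
    """Average custom-concept score of the documents from each year."""
    totals: dict[int, float] = defaultdict(float)
    counts: dict[int, int] = defaultdict(int)
    for year, acts in docs:
        totals[year] += concept_score(acts, members)
        counts[year] += 1
    return {year: totals[year] / counts[year] for year in sorted(totals)}


series = prominence_by_year(docs, members)
print({year: round(v, 3) for year, v in series.items()})  # {2021: 0.05, 2023: 0.7}
```

Years with no documents simply don't appear in the result; a chart would need to decide whether to show them as gaps or zeros.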

Surprise – We can compare the relationship and co-evolution of this idea with another one in our dataset, perhaps this concept related to interviews with creative leaders like film directors. I discovered this relationship in my real concept dataset while preparing this talk; it was a connection between ideas I didn’t expect.

The ability for knowledge tools to surprise us with new insight is fundamental to the process of discovery, and too often ignored in AI-based knowledge tools, which largely rely on humans asking precise, well-formed questions about data.

Based on this clue, we may choose to examine this relationship more deeply, with a scatter plot. Notice that by treating documents as collections of ideas rather than words, we can benefit from the well-established field of data graphics to study an entirely new universe of documents and unstructured ideas.

Conclusion – So, there we have it. A tool that lets us discover new concepts and relationships between ideas, and use them to see our knowledge and our world through a new lens.

Good human interfaces let us see the world from new perspectives.

I really view language models as a new kind of scientific and creative instrument, like a microscope for a mathematical space of ideas. And as our understanding of this mathematical space and our instrument improves, I think we’ll see rapid progress in our ability to craft new ideas and imagine new worlds, just as we’ve seen for color and music.

